Map Reduce: File compression and Processing cost

Posted by sranka on August 19, 2015

Recently, while working with a customer, we ran into an interesting situation concerning file compression and processing time. For a system like Hadoop, file compression has always been a good way to save space, especially since Hadoop replicates the data multiple times.

All Hadoop compression algorithms exhibit a space/time trade-off: faster compression and decompression speeds usually come at the expense of space savings. For more details about how compression is used, see https://documentation.altiscale.com/when-and-why-to-use-compression. There are many file compression formats, but below we only mention some of the commonly used compression methods in Hadoop.

The type of compression plays an important role: the true power of MapReduce is realized when the input can be split, and not all compression formats are splittable. A non-splittable format can result in far fewer map tasks than expected.
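To see why a gzip file cannot simply be cut at a block boundary, here is a minimal sketch in plain Python (not Hadoop code). The payload and the helper name `try_decompress` are made up for illustration. It compresses some text as one gzip stream and then tries to read the second half of the compressed bytes on its own, which is roughly what a map task starting in the middle of the file would have to do.

```python
import gzip
import zlib

# Build a compressible payload and gzip it as a single stream.
payload = b"the quick brown fox jumps over the lazy dog\n" * 50_000
compressed = gzip.compress(payload)


def try_decompress(chunk: bytes) -> bool:
    """Return True if the chunk can be decompressed on its own as gzip."""
    try:
        gzip.decompress(chunk)
        return True
    except (OSError, EOFError, zlib.error):
        # No gzip header at this offset, or the data is not a valid stream.
        return False


midpoint = len(compressed) // 2
whole_ok = try_decompress(compressed)
second_half_ok = try_decompress(compressed[midpoint:])

print("whole file readable:", whole_ok)
print("second half readable on its own:", second_half_ok)
```

A reader that starts mid-stream has no header and no earlier data to resolve back-references against, so the whole file has to be read from the beginning by a single task.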

For splittable formats, the number of mappers corresponds to the number of block-sized chunks in which the file is stored, whereas for a non-splittable format a single map task processes all the blocks. Although the table below lists LZO as not splittable, LZO files can be indexed, which makes them splittable. At Altiscale, our experience has shown that indexing LZO-compressed files can greatly improve performance. For instructions, see https://documentation.altiscale.com/compressing-and-indexing-your-data-with-lzo.

| Format | Codec | Extension | Splittable | Hadoop | HDInsight |
|---|---|---|---|---|---|
| DEFLATE | org.apache.hadoop.io.compress.DefaultCodec | .deflate | N | Y | Y |
| Gzip | org.apache.hadoop.io.compress.GzipCodec | .gz | N | Y | Y |
| Bzip2 | org.apache.hadoop.io.compress.BZip2Codec | .bz2 | Y | Y | Y |
| LZO | com.hadoop.compression.lzo.LzopCodec | .lzo | N | Y | N |
| LZ4 | org.apache.hadoop.io.compress.Lz4Codec | .lz4 | N | Y | N |
| Snappy | org.apache.hadoop.io.compress.SnappyCodec | .snappy | N | Y | N |
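As a quick way to check an input file against the table above, the following sketch looks up splittability by file extension. The dictionary and the helper `is_splittable` are hypothetical names for illustration, not part of Hadoop. The sketch assumes that a file with an unrecognized extension is uncompressed text and therefore splittable.

```python
from pathlib import PurePosixPath

# Splittability per extension, copied from the table above (no LZO indexing).
SPLITTABLE_BY_EXTENSION = {
    ".deflate": False,
    ".gz": False,
    ".bz2": True,
    ".lzo": False,  # becomes splittable only after the file has been indexed
    ".lz4": False,
    ".snappy": False,
}


def is_splittable(path: str) -> bool:
    """Look up the last extension; unknown extensions count as uncompressed."""
    extension = PurePosixPath(path).suffix.lower()
    return SPLITTABLE_BY_EXTENSION.get(extension, True)


for name in ("input.txt", "input.txt.gz", "input.txt.bz2"):
    print(name, "->", "splittable" if is_splittable(name) else "not splittable")
```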

To measure the performance and processing cost of each compression method, we ran a simple wordcount example on Altiscale’s in-house production cluster, following the steps in the link below. Although more detailed methodologies for measuring performance exist, we chose this example just to demonstrate the benefit and performance tradeoff of a splittable solution:

https://documentation.altiscale.com/wordcount-example

The following table shows the results.

| # | File Name | Compression Option | Size (GB) | Comments | # of Maps | # of Reducers | Processing Time |
|---|---|---|---|---|---|---|---|
| 1 | input.txt | n/a | 5.9 | No Compression | 24 | 1 | 1min 16sec |
| 2 | input.txt.gz | default | 1.46 | Normal Gzip compression | 1 | 1 | 11min 19sec |
| 3 | input1.txt.gz | -1 | 1.42 | gzip with -1 option, means optimize for speed | 1 | 1 | 11min 41sec |
| 4 | input9.txt.gz | -9 | 1.14 | gzip with -9 option, means optimize for space | 1 | 1 | 11min 21sec |
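The trade-off in the results table can be worked out directly. This short sketch copies the sizes and times from the table and computes, for each gzip run, the space saved and the slowdown relative to the uncompressed run. The variable and function names are chosen here for illustration.

```python
# (file name, size in GB, processing time in seconds), copied from the table.
BASELINE = ("input.txt", 5.9, 1 * 60 + 16)
GZIP_RUNS = [
    ("input.txt.gz", 1.46, 11 * 60 + 19),
    ("input1.txt.gz", 1.42, 11 * 60 + 41),
    ("input9.txt.gz", 1.14, 11 * 60 + 21),
]


def compare(baseline, run):
    """Return (space saved in percent, slowdown factor) versus the baseline."""
    _, base_gb, base_sec = baseline
    _, gb, sec = run
    return (1 - gb / base_gb) * 100, sec / base_sec


comparison = {run[0]: compare(BASELINE, run) for run in GZIP_RUNS}
for name, (saved_pct, slowdown) in comparison.items():
    print(f"{name}: {saved_pct:.1f}% less space, {slowdown:.1f}x slower")
```

With these figures, gzip saves roughly 75 to 81 percent of the space, while the job runs about nine times slower.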

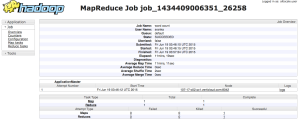

The Resource Manager Web UI shows the relevant job statistics for each run: the processing time and the number of mappers and reducers that were used.

Conclusion

In test #1, the uncompressed file was 5.9 GB when stored in HDFS. With an HDFS block size of 256 MB, the file was stored as ~24 blocks, and a MapReduce job using this file as input created 24 input splits, each processed independently by a separate map task, taking only 1 min 16 sec in total.
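The block arithmetic can be sketched as follows. This is a simplified estimate, assuming one input split per HDFS block and a single split for any non-splittable file. Hadoop's actual split calculation also depends on the configured minimum and maximum split sizes, and the function name `estimate_map_tasks` is made up for this example.

```python
import math


def estimate_map_tasks(file_size_gb: float, block_size_mb: int, splittable: bool) -> int:
    """Estimate map tasks: one per block if splittable, otherwise exactly one."""
    if not splittable:
        return 1
    file_size_mb = file_size_gb * 1024
    return max(1, math.ceil(file_size_mb / block_size_mb))


# Test #1: 5.9 GB uncompressed text with a 256 MB block size.
uncompressed_maps = estimate_map_tasks(5.9, 256, splittable=True)

# Test #2: the 1.46 GB gzip file spans several blocks but cannot be split.
gzip_maps = estimate_map_tasks(1.46, 256, splittable=False)

print("uncompressed:", uncompressed_maps, "map tasks")
print("gzip:", gzip_maps, "map task")
```

Here 5.9 GB is 6041.6 MB, which divided by 256 MB gives 23.6, rounded up to 24 splits. That matches the 24 maps reported for test #1.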

In the remaining test scenarios, gzip compression meant the file could not be split, resulting in a single input split and an average processing time of approximately 11 min. Neither the gzip -1 option, which optimizes for speed, nor the -9 option, which optimizes for space, helped much.

Gzip compression is an important aspect of the Hadoop ecosystem; it saves space at the cost of processing time. If data processing is time-sensitive, a splittable compression format, or even uncompressed files, is recommended.
